Imagine you have a magic notebook that can answer any question you write in it. It doesn’t just understand questions like “What’s 2+2?” but also tricky ones like “What should I draw today?” The way you ask your question in the notebook makes a big difference in what kind of answer you get. This is a bit like playing a game where you have to ask the right way to win the best answers. This game is called “prompt engineering.”

Let’s say you want the notebook to tell you a story about a dragon. If you write, “Tell me a story,” the notebook might give you any story—it could be about a bunny, not a dragon. But if you write, “Tell me a story about a brave dragon,” you’re more likely to get the dragon story you want. You were smart about how you asked, and that’s what prompt engineering is all about!

Sometimes, you might even tell the notebook a couple of stories you already know and like. You can say, “I love stories about dragons and knights. Now, tell me another story like that.” By doing this, you’re helping the notebook understand even better what kind of story you want. It’s like giving hints before asking your big question.

Prompt engineering is about learning the best way to ask questions of artificial intelligence tools such as ChatGPT and Llama2, so they understand exactly what you’re looking for, whether it’s a story, an answer to a question, or help with homework. This post includes practical exercises that you can follow along with to help you understand the basic concepts of prompt engineering.

Large Language Models

Envision having an immense library that houses every book, article, website, and message from across the globe. This library is so big that no person could read everything in it in their entire lifetime. But what if you had a super-smart robot friend who could read all of it extremely fast and remember everything? This robot friend is called a large language model (LLM), such as ChatGPT or Llama2.

LLMs are really good at two things: understanding what you’re asking, because they have read so much, and making up new sentences that sound like they could be in a book or on a website. When you ask an LLM something, it draws on everything it has read and tries to come up with the best answer or story based on that information.

The way it does this is a bit like playing with LEGO blocks. Each word or idea it knows is like a LEGO piece. When you ask it a question, it starts building an answer by snapping these LEGO pieces together in a way that makes sense. Sometimes, it builds something simple, like a small house of words. Other times, it builds a big castle with lots of details, depending on what you ask for.

But remember, LLMs are not perfect. Sometimes they make mistakes or get things mixed up because they are trying to guess the right thing to say. It’s like when you’re telling a story and you accidentally say a cat barked instead of meowed: it’s not because you don’t know, but because you’re trying to remember and sometimes get things a little wrong.

ChatGPT

ChatGPT is an advanced artificial intelligence (AI) language model developed by OpenAI, designed to generate human-like text based on the input it receives. It functions as part of the larger family of language models that have been trained on a diverse dataset comprising books, websites, and other textual materials to understand and produce language across a wide range of topics and styles. ChatGPT is free to use and can be accessed by creating an account on the OpenAI website and logging in.

The primary function of ChatGPT is to process and interpret user queries, generating responses that mimic human conversational patterns. This capability allows it to engage in dialogue, answer questions, provide explanations, and even create content on demand, such as stories, essays, or code snippets. ChatGPT’s training involves understanding context, intent, and the nuances of language, enabling it to produce relevant and coherent responses.

Here is an example of how you can interact with ChatGPT to create a cybersecurity learning plan for yourself, dividing tasks and learning objectives over 8 months:

Zero-shot Prompting

Zero-shot prompting is a technique used in natural language processing where a model generates a response or performs a task without having been provided any specific examples related to that task beforehand. Essentially, you ask the model to do something without showing it any examples in the prompt, so it relies solely on its pre-trained knowledge and understanding.

In the context of cybersecurity, let’s say you’re interested in understanding a complex concept like “phishing.” A zero-shot prompt to a language model might be formulated as follows:

“Explain what phishing is and how it can be identified.”

Given this prompt, the model uses its general understanding of cybersecurity (gained during its training on a vast corpus of text) to explain phishing. It would likely describe phishing as a type of online scam where attackers impersonate legitimate organizations via email, websites, or other means to steal sensitive data from individuals, such as login credentials or financial information. The model might further elaborate on common identifiers of phishing attempts, such as suspicious email addresses, urgent or threatening language, and links to fake websites.
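To make the idea concrete, here is a minimal Python sketch of what a zero-shot prompt looks like when it is packaged for a chat-style model. The function name `build_zero_shot_prompt` is hypothetical, and the `role`/`content` message layout is an assumption based on the convention many chat APIs use; the point is only that the message list holds the task and nothing else.

```python
def build_zero_shot_prompt(task: str) -> list[dict]:
    """Return a chat-style message list containing only the task itself."""
    # Zero-shot: no worked examples are included, only the instruction.
    return [{"role": "user", "content": task.strip()}]


messages = build_zero_shot_prompt(
    "Explain what phishing is and how it can be identified."
)
print(messages)
```

Because no examples are supplied, the quality of the answer depends entirely on how clearly the single instruction is worded.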

Few-shot Prompting

Few-shot prompting is a method where a language model is given a small number of examples (shots) to help it understand the task it needs to perform. This approach effectively ‘primes’ the model by showing it instances of the desired output, enabling it to generate similar responses or perform related tasks. It’s particularly useful when you want the model to follow a specific format or tackle a topic it might not excel in naturally.

Example: Study Tips

Let’s say you want to ask a language model for study tips, but you want these tips to be specific, like using visualization techniques for memory improvement and taking regular breaks to enhance focus. You can provide a few examples of study tips that match your criteria as part of your prompt.

Prompt to the model:

“To help improve study habits, here are a few tips:

- Use mnemonic devices to memorize lists.

- Study in short bursts of 25 minutes followed by a 5-minute break.

Based on these examples, can you provide more study tips that could help students retain information and maintain concentration?”

By providing a few specific examples of the kind of study tips you’re interested in, you’re guiding the model to understand not just the content of your request but also the format and context in which you want the information presented. The model, drawing on the structure and substance of your examples, is then primed to generate additional study tips that align with your initial inputs, such as recommending visualization techniques for better memory retention and suggesting regular, short breaks to keep the mind fresh and focused.
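The same prompt can be assembled programmatically, which is handy when you want to swap examples in and out. The sketch below is only an illustration: `build_few_shot_prompt` is a hypothetical helper, and the bullet formatting is one of many reasonable ways to present the shots.

```python
def build_few_shot_prompt(intro: str, examples: list[str], request: str) -> str:
    """Assemble a prompt that shows example outputs before the actual request."""
    lines = [intro]
    # Each example ("shot") is formatted the same way so the model can copy the pattern.
    lines.extend(f"- {example}" for example in examples)
    lines.append(request)
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    intro="To help improve study habits, here are a few tips:",
    examples=[
        "Use mnemonic devices to memorize lists.",
        "Study in short bursts of 25 minutes followed by a 5-minute break.",
    ],
    request=(
        "Based on these examples, can you provide more study tips that could "
        "help students retain information and maintain concentration?"
    ),
)
print(prompt)
```

Keeping the examples in a list makes it easy to experiment with how many shots, and which ones, give the most useful responses.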

Chain of Thought (CoT) Prompting

Chain of Thought (CoT) prompting is an advanced technique used with language models to solve complex problems or answer intricate questions. This approach involves explicitly asking the model to “think out loud” or detail its reasoning process step by step before arriving at a final answer or solution. By doing so, CoT prompting helps the model navigate through a series of logical deductions, much like a human would when trying to understand a problem or explain a concept. This method is particularly useful for tasks requiring critical thinking, deep understanding, or multi-step reasoning.

Example: Analyzing a Cybersecurity Incident

Let’s apply CoT prompting to analyze a hypothetical cybersecurity incident, such as a breach involving suspicious network activity.

Prompt to the model: “A company has detected unusual traffic patterns on its network, suggesting a potential security breach. Step by step, analyze how this situation should be investigated to identify the cause and mitigate the threat.”

In this example, the CoT prompting guides the language model through a logical, step-by-step analysis of the cybersecurity incident, mirroring a human expert’s thought process. This approach not only aids in generating a comprehensive response but also makes the reasoning transparent, allowing users to follow and understand the model’s thought process, ultimately leading to more informed and effective solutions.
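In code, a CoT prompt is often just the scenario plus an explicit request for step-by-step reasoning. The following is a small sketch under that assumption; `build_cot_prompt` and the exact wording of `COT_INSTRUCTION` are hypothetical choices, not a fixed formula.

```python
COT_INSTRUCTION = (
    "Step by step, analyze how this situation should be investigated "
    "to identify the cause and mitigate the threat."
)


def build_cot_prompt(scenario: str, instruction: str = COT_INSTRUCTION) -> str:
    """Append an explicit request for step-by-step reasoning to a scenario."""
    return f"{scenario.strip()}\n\n{instruction}"


cot_prompt = build_cot_prompt(
    "A company has detected unusual traffic patterns on its network, "
    "suggesting a potential security breach."
)
print(cot_prompt)
```

Separating the scenario from the reasoning instruction lets you reuse the same instruction across many different incidents.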

Prompt Chaining

Prompt chaining is a technique used with language models where a complex task is broken down into smaller, more manageable tasks or questions. These smaller tasks are addressed sequentially, with the output of one prompt serving as the input or context for the next. This method allows for a more refined and precise exploration of a topic or problem, especially when dealing with complex subjects that require a step-by-step approach.

Example: Understanding a Cybersecurity Threat

Let’s use prompt chaining to understand and address a cybersecurity threat, such as a ransomware attack. The goal is to gather information about the attack, understand how it operates, and then generate recommendations for preventing such attacks in the future.

First Prompt: “What is ransomware? Please answer in a single paragraph.”

The model explains ransomware as a type of malicious software that encrypts the victim’s files, demanding a ransom to restore access.

Second Prompt (using information from the first): “How does ransomware infect a computer?”

Based on its understanding, the model might describe common infection methods, such as phishing emails or exploiting security vulnerabilities.

Third Prompt (building on the previous responses): “What are the best practices for protecting against ransomware attacks?”

Here, the model can leverage its prior explanations to offer detailed preventive measures, like maintaining up-to-date software, backing up important data, and educating users on recognizing phishing attempts.

Through prompt chaining, you guide the language model through a series of interconnected questions, each building on the last. This method allows for a deeper dive into the subject matter, enabling not only a broader understanding but also the generation of more nuanced and comprehensive responses. In the case of cybersecurity, it helps unpack complex issues step by step, making it easier to grasp the nature of the threat and how to mitigate it effectively. Let’s try utilizing the prompt chaining technique with a local AI model that is private to our personal laptop.
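Before moving to a local model, the chaining loop itself can be sketched independently of any particular model. In the Python example below, `run_chain` is a hypothetical helper and `fake_model` is a stand-in that only reports how much context it received; a real setup would replace it with a call to an actual model. The `role`/`content` message layout is again an assumed convention.

```python
from typing import Callable

Message = dict[str, str]


def run_chain(prompts: list[str], ask: Callable[[list[Message]], str]) -> list[Message]:
    """Send prompts one at a time, carrying earlier turns forward as context."""
    history: list[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        # The model sees every earlier question and answer, so each step
        # can build on the previous one.
        answer = ask(history)
        history.append({"role": "assistant", "content": answer})
    return history


def fake_model(history: list[Message]) -> str:
    """Hypothetical stand-in for a real model call; it only reports context size."""
    return f"(answer written with {len(history)} message(s) of context)"


chain = run_chain(
    [
        "What is ransomware? Please answer in a single paragraph.",
        "How does ransomware infect a computer?",
        "What are the best practices for protecting against ransomware attacks?",
    ],
    fake_model,
)
for message in chain:
    print(f"{message['role']}: {message['content']}")
```

The growing `history` list is what makes this a chain: the third question is answered with the first two exchanges already in view.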

Llama2

Llama2 (Large Language Model Meta AI 2) is an open-source language model developed by Meta (formerly Facebook). It represents a significant step in the field of artificial intelligence (AI) by offering a robust alternative to proprietary models like OpenAI’s GPT series. The decision to make Llama2 open-source aligns with Meta’s goal of democratizing access to cutting-edge AI technology, enabling researchers, developers, and organizations around the world to innovate and contribute to the advancement of natural language processing (NLP).

The architecture of Llama2 builds upon the successes of its predecessors, incorporating advanced machine learning techniques to understand and generate human-like text. It has been trained on a diverse dataset, encompassing a broad range of internet text. This extensive training enables Llama2 to perform a wide array of tasks, from answering questions and summarizing texts to generating content and engaging in conversations.

Llama2’s release into the open-source domain marks a pivotal moment in the AI landscape, potentially accelerating progress and fostering a more inclusive and collaborative environment for AI research and development.

Difference Between ChatGPT and Llama2

Llama2 and ChatGPT are both advanced language models capable of generating human-like text, but they differ significantly in their accessibility and deployment capabilities. The most notable difference is that Llama2, developed by Meta, is open source. This allows developers, researchers, and organizations to download, modify, and run the model locally on their servers or personal computers.

This flexibility enables a wide range of customized applications and allows for greater control over data privacy and security, as the processing can occur entirely in-house without necessitating an internet connection to external servers. Conversely, ChatGPT, developed by OpenAI, primarily operates as a proprietary model offered via a cloud-based service, which might limit customization options and requires data to be sent to OpenAI’s servers for processing. The distinction between the two models emphasizes Llama2’s appeal for those seeking open-source alternatives with the capability for local deployment, fostering innovation and personalized AI solutions within the bounds of privacy and security preferences.

Ollama

We can begin by installing a tool called Ollama to run large language models locally. One of the primary advantages of running models locally, as opposed to using cloud-based services (such as ChatGPT), is the enhanced privacy of your data. When you process data on your local machine or server, it doesn’t need to be transmitted over the internet to a third-party server for processing. This means that sensitive information remains within the confines of your own infrastructure, reducing the risk of data breaches, unauthorized access, or exposure during transmission. Local processing thus offers a layer of security and privacy that is particularly valuable for handling confidential or sensitive data.

Installing Ollama

To install Ollama, browse to its official download page, download the application, and double-click it to start the installation process. Ollama is available for macOS, Linux, and Windows (Note: The Windows application is only available in preview mode).

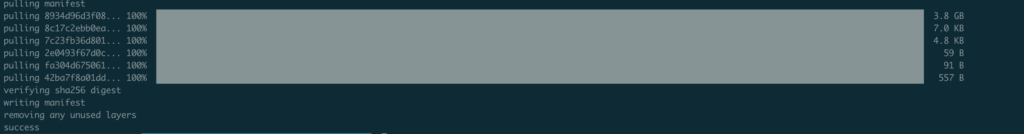

Once Ollama is installed, we can download Llama2 (or any other open model like Mistral or Gemma) to our local machine by running the following command in a terminal or command line (Note: the size of the model is around 3.8 GB):

$ ollama pull llama2

Once the model has been downloaded, we can start interacting with it by executing the following command on the command line:

$ ollama run llama2

After running this command, you will find yourself at the chat prompt for Llama2 (Note: To exit the prompt, type in /exit). Let’s retry our example of understanding ransomware using Llama2 on our local machines:
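Beyond the interactive prompt, the local model can also be reached from a script through the HTTP API that Ollama serves on your machine. The sketch below assumes the server is listening on its default local address (http://localhost:11434) and uses the `/api/chat` endpoint with streaming turned off; `build_chat_request` and `ask_ollama` are hypothetical helper names. Only the request body is built and printed here, so the example runs even when Ollama is not started.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(messages: list[dict], model: str = "llama2") -> bytes:
    """Encode a non-streaming chat request body for the /api/chat endpoint."""
    body = {"model": model, "messages": messages, "stream": False}
    return json.dumps(body).encode("utf-8")


def ask_ollama(messages: list[dict], model: str = "llama2") -> str:
    """Send the conversation so far to the local server and return the reply text."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=build_chat_request(messages, model),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # A non-streaming reply is a single JSON object with a "message" field.
        return json.load(response)["message"]["content"]


payload = build_chat_request(
    [{"role": "user", "content": "What is ransomware? Please answer in a single paragraph."}]
)
print(payload.decode("utf-8"))
```

With Ollama running, calling `ask_ollama` repeatedly with a growing message list (each question followed by the model's previous answer) reproduces the ransomware prompt chain entirely on your own machine.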

Key Takeaways

Prompt engineering is a critical and evolving aspect of interacting with AI and language models, offering a gateway to unlocking their vast potential. By carefully crafting prompts, users can guide these models to generate more accurate, creative, or contextually relevant outputs. This nuanced dialogue between human input and AI output not only enhances the effectiveness of the models but also pushes the boundaries of what they can achieve, from solving complex problems to generating insightful content across various domains. As we continue to explore and refine the art of prompt engineering, we are essentially participating in a collaborative dance with AI, where each step and turn leads to deeper understanding and more meaningful interactions.

Moreover, the field of prompt engineering is rich with strategies to maximize the utility and adaptability of AI models. Here are a few useful tips for effectively engaging with these systems:

- Start with clear and concise prompts: Simplicity often leads to better understanding and results.

- Use examples or few-shot learning when possible: Providing context or examples can significantly improve the model’s performance on specific tasks.

- Iterate and refine your prompts: Experimentation and tweaking can help discover the most effective way to communicate your needs to the model.

- Employ prompt chaining for complex queries: Breaking down a complex request into simpler, sequential prompts can yield more comprehensive and accurate responses.

- Specify the desired response format: Clearly indicate if you want the answer in a specific format, such as a list, a detailed explanation, bullet points, or a concise summary. This helps in aligning the model’s output with your expectations.

- Set the tone or style: Inform the AI of the desired tone for its response, whether it’s formal, casual, humorous, or professional. This is especially useful in content creation, ensuring the output matches the intended audience’s expectations.

- Request examples or analogies: If you’re dealing with complex subjects, asking the AI to include examples, analogies, or metaphors can make the information more accessible and easier to understand.

- Limit the scope: If you’re looking for specific information, explicitly stating the scope or boundaries of the response can prevent the model from providing too broad or unrelated information.

- Ask for reasoning or explanations: Especially in educational contexts or when exploring new topics, prompting the AI to include explanations for its answers or to “show its work” can offer deeper insights and enhance understanding.

- Leverage expertise in interactions: Preface your prompts with a statement of your expertise in the relevant field. By specifying your expertise and the depth of information you’re seeking, you make the AI model more likely to provide a detailed, technical response that delves into sophisticated aspects, rather than a broad, surface-level explanation. This method tailors the interaction to yield insights that are more relevant and applicable to advanced study or professional applications:

Prompt Without Expertise: “Explain phishing attacks.”

Prompt Indicating Expertise: “As a cybersecurity expert focusing on digital fraud mechanisms, I’m researching advanced tactics in phishing attacks. Could you detail the latest methodologies used in spear phishing and the psychological triggers exploited in such attacks?”
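Several of the tips above (stating expertise, specifying a format, setting a tone, limiting scope) can be combined in a small template. The Python sketch below is one hypothetical way to do that; `build_prompt` and its parameter names are illustrative assumptions, and the exact wording of each hint is a matter of taste.

```python
def build_prompt(
    question: str,
    role: str = "",
    response_format: str = "",
    tone: str = "",
    scope: str = "",
) -> str:
    """Combine a question with optional expertise, format, tone, and scope hints."""
    parts = []
    if role:
        parts.append(f"As {role}, I have the following question.")
    parts.append(question)
    if response_format:
        parts.append(f"Respond as {response_format}.")
    if tone:
        parts.append(f"Use a {tone} tone.")
    if scope:
        parts.append(f"Limit the answer to {scope}.")
    return " ".join(parts)


prompt = build_prompt(
    question="Could you detail the methodologies used in spear phishing?",
    role="a cybersecurity expert focusing on digital fraud mechanisms",
    response_format="a bulleted list",
    tone="formal",
    scope="email-based attacks only",
)
print(prompt)
```

Leaving every optional argument empty returns the bare question, which makes it easy to compare a plain prompt against a more specific one.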

As we delve deeper into the capabilities of language models, prompt engineering remains a cornerstone of our journey, bridging the gap between human curiosity and AI’s expansive knowledge reservoir. This evolving discipline not only enhances our interactions with AI but also pushes the boundaries of what these sophisticated models can achieve, transforming vast data landscapes into tailored insights and creative outputs. By mastering the art of prompt engineering, we unlock new realms of possibility, from solving complex challenges to generating innovative ideas, all while steering the AI in directions that most align with our goals and values. As technology progresses, the role of prompt engineering in shaping the future of AI and its integration into every facet of our lives becomes increasingly evident, marking it as an essential skill for the modern digital explorer.
