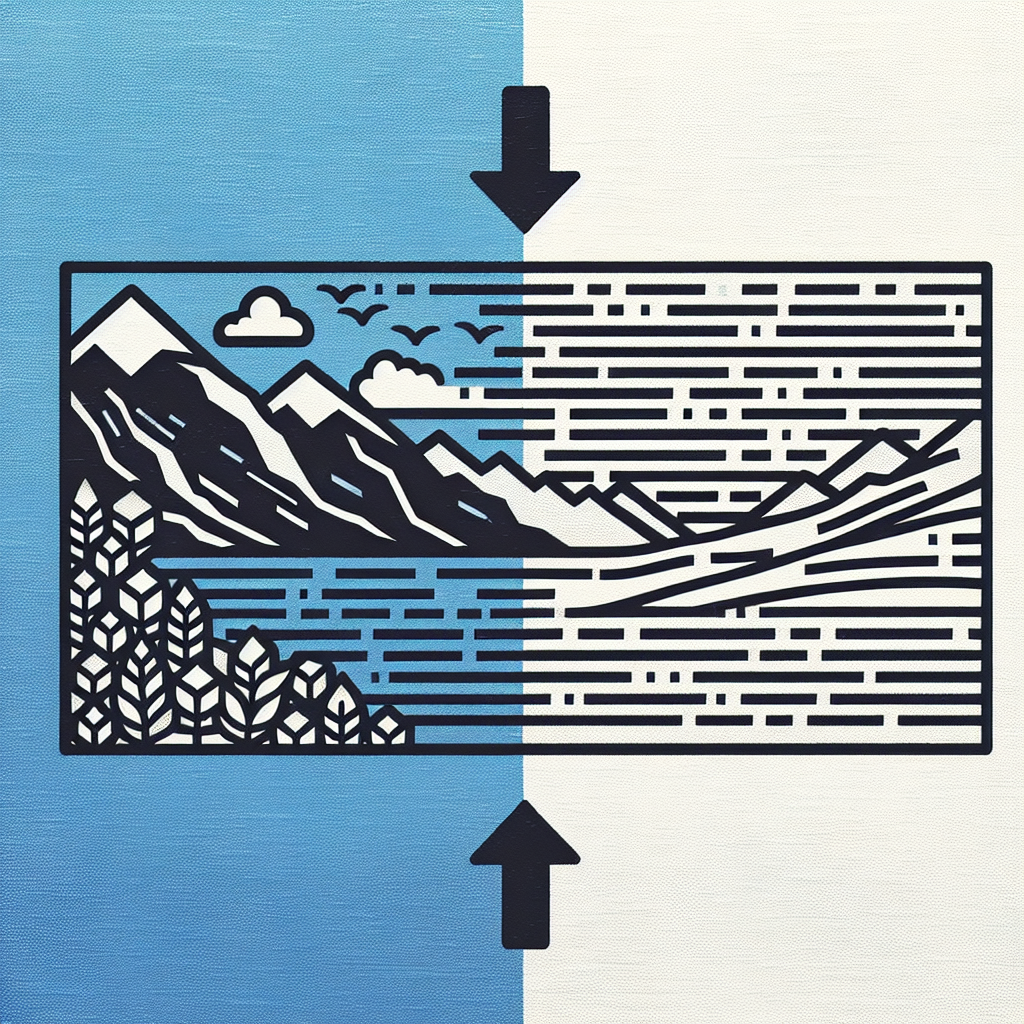

When you look at a photo, your brain instantly processes its meaning and connects it to related memories and ideas. Modern AI systems can now perform a similar feat by analyzing images and finding relevant text passages that match their content. This approach, known as image-to-text semantic search, transforms the traditional way we connect visual and written information. Rather than manually describing every image with keywords, these systems use neural networks to understand the visual elements and automatically find matching text descriptions, stories, or documents. A photo of a stormy coastline might lead you to passages about maritime adventures, while a picture of a cozy fireplace could surface descriptions of winter evenings – all without relying on predetermined tags or labels.

Prerequisite Knowledge

- Langchain -> https://python.langchain.com/docs/tutorials/

- Milvus -> https://milvus.io/docs/quickstart.md

- Ollama -> https://ollama.com/

- Python3/Jupyter Notebooks -> https://docs.python.org/3/tutorial/index.html

- Vector Embeddings -> https://milvus.io/intro

Semantic Search Of Images

A photo of a wizard in gray robes gets transformed by AI into a caption like “An elderly wizard with a long beard wearing flowing gray robes stands with his staff in his hand”. This caption then acts as a search query, finding semantically similar text passages in a database – perhaps matching with book excerpts describing Gandalf’s journey through the Misty Mountains or other scenes featuring wise travelers in remote locations.

The system doesn’t just match exact words; it understands the deeper meaning and context of both the image and text, connecting them through their semantic similarity. This allows the search to find relevant passages even if they don’t use the exact same words as the caption.
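Under the hood, "semantic similarity" is usually computed by comparing embedding vectors, most commonly with cosine similarity (the cosine of the angle between two vectors). The sketch below uses tiny, invented 3-dimensional vectors purely to illustrate the arithmetic. Real nomic-embed-text embeddings have 768 dimensions, no model produced these numbers, and the labels in the comments are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", invented for illustration only.
caption_vec = [0.9, 0.1, 0.3]  # e.g. "an old wizard holding a staff"
gandalf_vec = [0.8, 0.2, 0.4]  # e.g. a passage about Gandalf
shire_vec = [0.1, 0.9, 0.2]    # e.g. a passage about farming in the Shire

print(f"caption vs Gandalf passage: {cosine_similarity(caption_vec, gandalf_vec):.3f}")
print(f"caption vs Shire passage:   {cosine_similarity(caption_vec, shire_vec):.3f}")
```

The caption vector scores much closer to the Gandalf passage than to the Shire passage. The comparison never looks at words at all, which is why a caption and a passage can match without sharing vocabulary.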

Image to Text Search Tool

Let’s create a new Jupyter Notebook to demonstrate how to do image-to-text semantic search using Python3, Langchain, and Milvus. Much of this notebook will be similar to the text-to-text search tool that was demonstrated in a previous post, with a few subtle changes.

Ollama setup

Before starting this semantic search project, you need to set up Ollama on your local system. First, download and install Ollama from the official website; it is available for macOS, Windows, and Linux. After installation, download the nomic-embed-text model (used to create text embeddings) and the LLaVA model (a vision-language model used to caption images):

ollama pull nomic-embed-text

ollama pull llava

Downloading A Book For Semantic Search

To test our semantic search, we’ll use 'The Fellowship of the Ring' as our sample text. You can download a text version of this book from the Internet Archive. Once downloaded, extract the contents of the file if it is compressed.

macOS

Using Archive Utility:

- Right-click the .gz file.

- Select Open With > Archive Utility.

Using Terminal:

- Open Terminal from Applications > Utilities.

- Navigate to the directory containing the .gz file using the cd command.

- Run gunzip j-r-r-tolkien-lord-of-the-rings-01-the-fellowship-of-the-ring-retail-pdf_hocr_searchtext.txt.gz to extract the file.

Linux

Using Terminal:

- Open Terminal.

- Navigate to the directory containing the .gz file using the cd command.

- Run gunzip j-r-r-tolkien-lord-of-the-rings-01-the-fellowship-of-the-ring-retail-pdf_hocr_searchtext.txt.gz to extract the file.

Using GUI:

- Right-click the .gz file.

- Select Extract Here or Extract to.

Windows

Using 7-Zip:

- Download and install 7-Zip.

- Right-click the .gz file.

- Select 7-Zip > Extract Here.

Once the file has been extracted, move it to the same directory as your Jupyter Notebook and rename it to fellowship-of-the-ring.txt.
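If you'd rather stay inside the notebook, Python's standard library can do the extraction and the rename in one step. This is a minimal sketch using gzip and shutil. extract_gz is a hypothetical helper name, and the file names in the usage comment assume the archive was saved next to the notebook.

```python
import gzip
import shutil
from pathlib import Path


def extract_gz(src: str, dest: str) -> Path:
    """Decompress a .gz file to dest, streaming so the whole file is never held in memory."""
    src_path, dest_path = Path(src), Path(dest)
    with gzip.open(src_path, "rb") as f_in, open(dest_path, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out)
    return dest_path


# Usage (assumes the downloaded archive sits next to the notebook):
# extract_gz(
#     "j-r-r-tolkien-lord-of-the-rings-01-the-fellowship-of-the-ring-retail-pdf_hocr_searchtext.txt.gz",
#     "fellowship-of-the-ring.txt",
# )
```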

Setting up the environment with required libraries

Next, we need to install the required packages.

!pip install -qU langchain langchain-community langchain-ollama langchain_milvus langchain-text-splitters ollama

This command quietly (-q) installs or upgrades (-U) these packages in your Python environment; the rest of the notebook depends on them.
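Because -U pulls the newest releases, import paths occasionally move between versions. A quick way to see what actually got installed is importlib.metadata from the standard library. installed_versions is a hypothetical helper, and the package list simply mirrors the install command, plus pymilvus, which the Milvus integration depends on.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for pkg, ver in installed_versions(
    ["langchain", "langchain-community", "langchain-ollama", "langchain-milvus",
     "langchain-text-splitters", "ollama", "pymilvus"]
).items():
    print(f"{pkg}: {ver or 'NOT INSTALLED'}")
```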

Import Necessary Libraries

# Import the Ollama embeddings, Milvus vector store, text loader and splitter, plus the Ollama client
from langchain_ollama import OllamaEmbeddings
from langchain_milvus import Milvus
from langchain_community.document_loaders import TextLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
import ollama

Loading The Document

loader = TextLoader("./fellowship-of-the-ring.txt", encoding="utf-8")
text_documents = loader.load()

This code loads the contents of fellowship-of-the-ring.txt into memory. TextLoader is a LangChain document loader for plain-text files; its load() method reads the whole file and returns a list of Document objects. For a single text file, that list contains one Document whose page_content holds the full text and whose metadata records the source path. Passing encoding="utf-8" explicitly avoids decoding errors on systems whose default encoding is not UTF-8, such as many Windows installations. The result, stored in text_documents, is the input for the splitting and embedding steps that follow.
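The Internet Archive text is derived from OCR of a scanned book, and the search results later in this post show page numbers (such as 390) and the running title THE FELLOWSHIP OF THE RING mixed into passages. An optional clean-up step, not part of the original pipeline, could strip those lines before splitting. This is a sketch that assumes such residue always sits on its own line. clean_page_residue is a hypothetical helper: it only removes the running titles you pass in, so any other headers would need to be added, and it also drops any genuine line consisting solely of a number.

```python
import re
from typing import Iterable

# Lines that consist only of a page number, e.g. "390".
PAGE_NUMBER_LINE = re.compile(r"^\s*\d{1,4}\s*$")


def clean_page_residue(text: str, running_headers: Iterable[str] = ("THE FELLOWSHIP OF THE RING",)) -> str:
    """Drop page-number-only lines and known running-header lines from OCR'd book text."""
    headers = {h.strip() for h in running_headers}
    kept = [
        line
        for line in text.splitlines()
        if not PAGE_NUMBER_LINE.match(line) and line.strip() not in headers
    ]
    return "\n".join(kept)


# Usage with the LangChain documents loaded above (assumes text_documents exists):
# for doc in text_documents:
#     doc.page_content = clean_page_residue(doc.page_content)
```

Cleaning the text changes the chunks and therefore the scores, so results will not match the ones shown later exactly.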

Splitting The Text

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
documents = text_splitter.split_documents(text_documents)

A text splitter is like a document chopper: it breaks long texts into smaller, manageable pieces (chunks). RecursiveCharacterTextSplitter tries to split on paragraph breaks first, then line breaks, then spaces, and only as a last resort between individual characters, so chunks tend to end at natural boundaries. With the settings above:

- Each chunk is at most about 1000 characters long (chunk_size=1000).

- Consecutive chunks share up to 200 characters of overlapping text (chunk_overlap=200).

Why Use Overlapping Chunks?

The overlap ensures that sentences or concepts that might be split between chunks aren’t lost. For example:

Original text: "Gandalf the Grey was a powerful wizard who helped the hobbits."

Chunk 1: "Gandalf the Grey was a powerful"

Chunk 2: "was a powerful wizard who helped"

Chunk 3: "wizard who helped the hobbits."

The overlap (shown in the repeated words) helps maintain context and prevents important information from being cut off at chunk boundaries. This is particularly important for semantic search, as it ensures that related concepts stay together even when the text is split.

The final line documents = text_splitter.split_documents(text_documents) applies this splitting process to your loaded text, creating a list of smaller document chunks that are easier to process and analyze.
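To see the overlap idea from the Gandalf example concretely, the sketch below slides a fixed-size window over words rather than characters. A window of 6 words with an overlap of 3 reproduces the three example chunks exactly. This is an illustration only (sliding_word_windows is a hypothetical helper); RecursiveCharacterTextSplitter measures chunk_size and chunk_overlap in characters and prefers paragraph, line, and word boundaries.

```python
from typing import List


def sliding_word_windows(text: str, window: int, overlap: int) -> List[str]:
    """Split text into windows of `window` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < window:
        raise ValueError("overlap must be >= 0 and smaller than window")
    words = text.split()
    step = window - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + window]))
        if start + window >= len(words):
            break  # this window already reached the end of the text
    return chunks


for chunk in sliding_word_windows(
    "Gandalf the Grey was a powerful wizard who helped the hobbits.", window=6, overlap=3
):
    print(chunk)
```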

Vector Database Setup

embeddings = OllamaEmbeddings(model="nomic-embed-text")

# Local Milvus Lite instance
URI = "./image-to-text.db"

# Init vector store
vector_store = Milvus(
    embedding_function=embeddings,
    connection_args={"uri": URI},
    auto_id=True,
)

This code sets up the core components for turning text into searchable vectors. Let’s break it down:

Embedding Creation

embeddings = OllamaEmbeddings(model="nomic-embed-text")

This line creates an embedding function backed by the nomic-embed-text model served by Ollama. Think of it as a translator that converts words and sentences into lists of numbers (vectors, 768 dimensions for this model) that a computer can compare: texts with similar meanings end up with vectors that point in similar directions.

Database Setup

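URI = "./image-to-text.db"

This specifies where the vector database will be stored. Because the URI is a local file path, pymilvus runs Milvus Lite, an embedded version of Milvus that keeps the whole database in this single file, so no separate Milvus server is needed.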

Vector Store Initialization

vector_store = Milvus(
    embedding_function=embeddings,
    connection_args={"uri": URI},
    auto_id=True,
)

This creates a new vector store using Milvus, which is like a special filing cabinet for vectors. It's set up with:

- The embedding function we created earlier to convert text to vectors

- The location where it should store its data (the URI we defined)

- auto_id=True, which means it will automatically assign a unique ID to each vector it stores

Upload The Documents To The Vector Store

vector_store.add_documents(documents)

This line takes all the text chunks produced by the splitter (documents), converts each chunk into a vector embedding, and stores the vectors together with their text in the Milvus database for later searching.
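add_documents embeds and inserts every chunk in one call, which for a whole novel can take a while with no feedback. One option, sketched below, is to feed the store in batches and print progress. batched is a hypothetical helper written here (Python 3.12+ ships a similar itertools.batched that yields tuples), and the batch size of 64 is an arbitrary choice. Also note that re-running the cell should add the same chunks again to the collection stored in image-to-text.db; passing drop_old=True to the Milvus constructor, or deleting the .db file, starts fresh.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


# Usage (assumes vector_store and documents from the cells above):
# for i, batch in enumerate(batched(documents, 64), start=1):
#     vector_store.add_documents(batch)
#     print(f"Inserted batch {i} ({len(batch)} chunks)")
```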

Semantic Search

# Perform a similarity search for the query "Wizard"
results = vector_store.similarity_search_with_score(
    "Wizard", k=3,
)

for res, score in results:
    print(f"* [SIM={score:3f}] {res.page_content}")
    print('-----------')

This code performs a semantic search for the word "Wizard" in the stored text, retrieves the 3 closest passages (k=3), and prints each one with its score. The loop displays each matching text passage along with a numerical score that reflects how closely it matches the search term.

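How to interpret the scores depends on the metric the collection uses:

For cosine similarity and inner product (IP):

- A higher score indicates higher similarity; cosine similarity ranges from -1 to 1, and 1.0 means the vectors point in the same direction.

- A score near 0 indicates little similarity.

For distance-based metrics (like Euclidean/L2 distance):

- A lower score indicates higher similarity; 0 means the vectors are identical.

Which metric applies depends on how the collection was created. When you create a collection directly with pymilvus's MilvusClient.create_collection and do not specify one, the default metric type is "COSINE". The LangChain Milvus wrapper used here, however, builds its index with the L2 metric by default at the time of writing (unless you pass index_params), so its scores are distances and lower is better. This is consistent with the image-query results later in this post, where the best match is listed first with the smallest score.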
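If the scores are L2 distances, they can still be related to cosine similarity. For unit-length vectors a and b, ‖a − b‖² = ‖a‖² + ‖b‖² − 2 a·b = 2 − 2 cos(a, b), so cos(a, b) = 1 − ‖a − b‖²/2. The sketch below checks that identity numerically on made-up vectors. Two assumptions must hold before applying it to real scores, and both are worth verifying for your setup: the embeddings must be L2-normalized, and the reported value must be the squared distance (the Milvus documentation describes its L2 metric this way, skipping the final square root).

```python
import math
from typing import List, Sequence


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_from_squared_l2(d2: float) -> float:
    """Valid ONLY for unit-length vectors: cos = 1 - d2 / 2."""
    return 1.0 - d2 / 2.0


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


# Made-up vectors, normalized so the identity applies.
a = normalize([0.9, 0.1, 0.3])
b = normalize([0.8, 0.2, 0.4])
d2 = squared_l2(a, b)
direct_cosine = sum(x * y for x, y in zip(a, b))
print(f"squared L2 = {d2:.4f}")
print(f"cosine via formula = {cosine_from_squared_l2(d2):.4f}, direct cosine = {direct_cosine:.4f}")
```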

Image Captioning

Now that our vector database has been created, we need to do the following:

- Load an image that we want to use for our image-to-text query.

- Caption the image using LLaVA.

- Query the vector database using the caption generated in the previous step.
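The three steps above can be sketched as one function. To keep it independent of any particular service, the captioning and search steps are passed in as plain callables. caption_fn, search_fn, and image_to_text_search are hypothetical names for this sketch, and the commented wiring at the bottom assumes the ollama client and the vector_store created earlier.

```python
from typing import Callable, List, Tuple

CAPTION_PROMPT = "Describe this image in a single detailed sentence."


def image_to_text_search(
    image_path: str,
    caption_fn: Callable[[str, str], str],
    search_fn: Callable[[str, int], List[Tuple[str, float]]],
    k: int = 3,
) -> Tuple[str, List[Tuple[str, float]]]:
    """Caption an image, then use the caption as a text query against a vector store."""
    caption = caption_fn(image_path, CAPTION_PROMPT).strip()
    if not caption:
        raise ValueError("the captioning model returned an empty caption")
    return caption, search_fn(caption, k)


# Possible wiring (assumes `import ollama` and the vector_store built earlier):
# def llava_caption(path, prompt):
#     response = ollama.chat(model="llava", messages=[{"role": "user", "content": prompt, "images": [path]}])
#     return response["message"]["content"]
#
# def milvus_search(query, k):
#     return [(doc.page_content, score) for doc, score in vector_store.similarity_search_with_score(query, k=k)]
#
# caption, hits = image_to_text_search("./gandalf.png", llava_caption, milvus_search)
```

Passing the steps in as functions also makes it easy to swap in a different captioning model or vector store without touching the pipeline itself.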

Downloading an Image

Download an image of Gandalf online and save it as gandalf.png in the same directory as your Jupyter notebook.
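Images saved from the web are not always what their extension claims; a page saved by mistake can be HTML with a .png name. A cheap sanity check, sketched below, reads the file's first bytes and compares them with the PNG and JPEG signatures. sniff_image_format is a hypothetical helper, and it deliberately recognizes only those two formats, so other valid formats such as WebP or GIF will report None.

```python
from pathlib import Path
from typing import Optional, Union

# File signatures ("magic numbers") for two common image formats.
IMAGE_SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
}


def sniff_image_format(path: Union[str, Path]) -> Optional[str]:
    """Return 'png' or 'jpeg' based on the file's first bytes, or None if unrecognized."""
    with open(path, "rb") as f:
        header = f.read(8)
    for signature, fmt in IMAGE_SIGNATURES.items():
        if header.startswith(signature):
            return fmt
    return None


# Usage:
# image_file = Path("./gandalf.png")
# assert image_file.exists(), "gandalf.png not found next to the notebook"
# print(sniff_image_format(image_file))
```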

Captioning the image

Use Ollama and LLaVA to create a caption for the image:

image_path = './gandalf.png'

response = ollama.chat(
    model="llava",
    messages=[
        {
            'role': 'user',
            'content': 'Describe this image in a single detailed sentence.',
            'images': [image_path],
        }
    ],
)

caption = response['message']['content']
print(caption)

The caption is generated by a language model, so it will vary from run to run (and with the image you chose), but it should look similar to the following:

In the image, renowned actor Ian McKellen, embodying his iconic character Gandalf from the Lord of the Rings trilogy, is seen standing amidst a grassy field with a backdrop of majestic mountains. Dressed in his signature wizard attire and holding a staff, he appears to be on an epic journey, gazing off into the distance.

Query The Vector Database

Finally, we can query the vector database using the image caption from the previous step:

results = vector_store.similarity_search_with_score(
    str(caption), k=3,
)

for res, score in results:
    print(f"* [SIM={score:3f}] {res.page_content}")
    print('-----------')

The results should contain passages mentioning Gandalf:

* [SIM=0.517461] ‘I will look,’ said Frodo, and he climbed on the pedestal and bent over the dark water. At once the Mirror cleared and he saw a twilit land. Mountains loomed dark in the distance against a pale sky. A long grey road wound back out of sight. Far away a figure came slowly down the road, faint and small at first, but growing larger and clearer as it approached. Suddenly Frodo realized that it reminded him of Gandalf. He almost called aloud the wizard’s name, and then he saw that the figure was clothed not in grey but in white, in a white that shone faintly in the dusk; and in its hand there was a white staff. The head was so bowed that he could see no face, and presently the figure turned aside round a bend in the road and went out of the Mirror’s view. Doubt came into Frodo’s mind: was this a vision of Gandalf on one of his many lonely

-----------

* [SIM=0.639687] In the wavering firelight Gandalf seemed suddenly to grow: he rose up, a great menacing shape like the monument of some ancient king of stone set upon a hill. Stooping like a cloud, he lifted a burning branch and strode to meet the wolves. They gave back before him. High in the air he tossed the blazing brand. It flared with a sudden white radiance like lightning; and his voice rolled like thunder.

‘ Naur an edraith ammen! Naur dan i ngaurhothF he cried.

There was a roar and a crackle, and the tree above him

390

THE FELLOWSHIP OF THE RING

-----------

* [SIM=0.664764] 368

THE FELLOWSHIP OF THE RING

snow; its great, bare, northern precipice was still largely in the shadow, but where the sunlight slanted upon it, it glowed red.

Gandalf stood at Frodo’s side and looked out under his hand. ‘We have done well,’ he said. We have reached the borders of the country that Men call Flollin; many Elves lived here in happier days, when Eregion was its name. Five-and- forty leagues as the crow flies we have come, though many long miles further our feet have walked. The land and the weather will be milder now, but perhaps all the more dangerous.’

‘Dangerous or not, a real sunrise is mighty welcome,’ said Frodo, throwing back his hood and letting the morning light fall on his face.

‘But the mountains are ahead of us,’ said Pippin. We must have turned eastwards in the night.’

-----------

Conclusion

Image-to-text semantic search represents more than just a technical advancement – it’s a fundamental shift in how we connect visual and written information. By bridging these two worlds, we’re moving beyond the limitations of traditional keyword matching and manual tagging. As the technology continues to evolve, we’ll see even more sophisticated applications, from enhancing digital libraries to improving content recommendation systems. The ability to seamlessly move from visual content to relevant textual information opens new possibilities for content discovery, research, and digital storytelling. Whether you’re a developer implementing these systems or a user benefiting from their capabilities, image-to-text search is reshaping how we interact with and understand our growing digital content libraries.

Full Code

# Import LangChain components and the Ollama client
from langchain_ollama import OllamaEmbeddings
from langchain_milvus import Milvus
from langchain_community.document_loaders import TextLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
import ollama

# Load the book and split it into overlapping chunks
loader = TextLoader("./fellowship-of-the-ring.txt", encoding="utf-8")
text_documents = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
documents = text_splitter.split_documents(text_documents)

# Embedding model served by Ollama
embeddings = OllamaEmbeddings(model="nomic-embed-text")

# Local Milvus Lite instance
URI = "./image-to-text.db"

# Init vector store and index the chunks
vector_store = Milvus(
    embedding_function=embeddings,
    connection_args={"uri": URI},
    auto_id=True,
)
vector_store.add_documents(documents)

# Caption the image with LLaVA
image_path = './gandalf.png'
response = ollama.chat(
    model="llava",
    messages=[
        {
            'role': 'user',
            'content': 'Describe this image in a single detailed sentence.',
            'images': [image_path],
        }
    ],
)
caption = response['message']['content']
print(caption)

# Use the caption as the search query
results = vector_store.similarity_search_with_score(
    caption, k=3,
)

for res, score in results:
    print(f"* [SIM={score:3f}] {res.page_content}")
    print('-----------')

Full Code Without Langchain

from pymilvus import MilvusClient
import ollama
from typing import Generator, Iterable, Optional
from pathlib import Path


def load_and_split_text(file_path: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> Generator[str, None, None]:
    """Load a text file and yield overlapping chunks of at most chunk_size characters"""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    try:
        text = Path(file_path).read_text(encoding='utf-8')
    except OSError as e:
        print(f"Error loading text: {e}")
        return
    start = 0
    while start < len(text):
        end = min(start + chunk_size, len(text))
        if end < len(text):
            # Move the end back to a whitespace character so words are not split,
            # but never so far that the next chunk would fail to move forward
            boundary = end
            while boundary > start + chunk_overlap and not text[boundary].isspace():
                boundary -= 1
            if boundary > start + chunk_overlap:
                end = boundary
        chunk = text[start:end].strip()
        if chunk:
            yield chunk
        if end == len(text):
            break
        # Start the next chunk chunk_overlap characters before this one ended
        start = end - chunk_overlap


def initialize_vector_store() -> Optional[MilvusClient]:
    """Initialize Milvus vector store"""
    try:
        milvus_client = MilvusClient(uri="./no-lang-image-to-text.db")
        collection_name = "text_collection"
        # Start from an empty collection on every run so chunks are not stored twice
        if milvus_client.has_collection(collection_name):
            milvus_client.drop_collection(collection_name)
        milvus_client.create_collection(
            collection_name=collection_name,
            dimension=768,  # nomic-embed-text produces 768-dimensional vectors
            metric_type="COSINE",
            consistency_level="Strong",
            auto_id=True
        )
        return milvus_client
    except Exception as e:
        print(f"Error initializing vector store: {e}")
        return None


def store_text_chunks(milvus_client: MilvusClient, chunks: Iterable[str]) -> None:
    """Store text chunks in vector store"""
    try:
        data = []
        for chunk in chunks:
            # Get embedding for text chunk
            embedding_response = ollama.embeddings(
                model='nomic-embed-text',
                prompt=chunk
            )
            embedding = embedding_response['embedding']
            data.append({
                "vector": embedding,
                "content": chunk
            })
        if data:
            milvus_client.insert(
                collection_name="text_collection",
                data=data
            )
            print(f"Successfully stored {len(data)} text chunks")
    except Exception as e:
        print(f"Error storing text chunks: {e}")


def search_similar_text(image_path: str, milvus_client: MilvusClient, k: int = 3) -> None:
    """Search for similar text passages based on image caption"""
    try:
        # Get caption for image
        response = ollama.chat(
            model="llava",
            messages=[{
                'role': 'user',
                'content': 'Describe this image in a single detailed sentence.',
                'images': [image_path]
            }]
        )
        caption = response['message']['content']
        print(f"Image Caption: {caption}\n")

        # Get embedding for caption
        embedding_response = ollama.embeddings(
            model='nomic-embed-text',
            prompt=caption
        )
        query_embedding = embedding_response['embedding']

        # Search for similar text passages
        results = milvus_client.search(
            collection_name="text_collection",
            data=[query_embedding],
            limit=k,
            search_params={
                "metric_type": "COSINE",
                "params": {}
            },
            output_fields=["content"]
        )

        # Display results (with COSINE, a higher score means more similar)
        for hit in results[0]:
            print(f"Similarity Score: {hit['distance']:.3f}")
            print(f"Text: {hit['entity']['content']}")
            print("-----------")
    except Exception as e:
        print(f"Error during text search: {e}")


# Initialize vector store
milvus_client = initialize_vector_store()
if milvus_client is None:
    raise RuntimeError("Could not initialize the Milvus vector store")

# Load and split text
print("Loading and splitting text...")
chunks = load_and_split_text("./fellowship-of-the-ring.txt")

# Store text chunks
print("Storing text chunks...")
store_text_chunks(milvus_client, chunks)

# Search similar text based on image
print("\nSearching for similar text passages...")
search_similar_text('./gandalf.png', milvus_client, k=3)
